I spent this past week creating these merged, complete models. After noticing slight imperfections, or areas I knew I could improve, I went back to take more photos and rework those areas. I have completed two of the set of six models. Six was an arbitrary goal set at the beginning and is flexible, but I am determined to finish the other four this coming weekend in order to meet the December 1st deadline for the conference.

A recording of my 15-minute PowerPoint presentation on the project is due on the first of December for the Society for Historical Archaeology (SHA) conference. I am willing to reduce the number of models from six if need be, but I intend to try to meet this initial goal. This means finishing the rest of the models and accepting the small imperfections in each one instead of continuing the slight tweaking I have been doing this past week. I can always go back and tweak them further after the December 1st deadline, or mention them in the limitations of the project. The goal, besides creating these models, is to then print them and compare the prints in relation to the low-cost methods I am using.

I adapted some of my methods over the past week and learned more about the capabilities of Metashape, which continues to surprise me the more I use it. A limitation last week was the processing time for each model. Because of the approaching deadlines, I turned the detail down from “High” to “Medium”, which processes in about 5 minutes as opposed to an hour and saved me a lot of time on this project. This does mean, however, that the quality of the overall model is lower, albeit more than acceptable. I also found that the arrowheads have a unique alignment problem: the automatic alignment of the different chunks does not really work for these sharp, flat pieces. I learned how to align these chunks manually, which is a little more time-intensive but was the last step in creating the finished model and, overall, was not too difficult.
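To put that time trade-off in rough numbers, here is a minimal Python sketch. It assumes the figures above (about 60 minutes at “High”, about 5 minutes at “Medium”) apply once per model and that four models remain; the name `total_minutes` is only illustrative, and actual processing times will vary with the photo set and the computer.

```python
# Rough estimate of processing time at each detail setting.
# Assumption: the approximate per-model times quoted in the post
# apply once per model; real runs will differ.
MINUTES_PER_MODEL = {"High": 60, "Medium": 5}
REMAINING_MODELS = 4  # six planned, two already finished


def total_minutes(detail: str, models: int = REMAINING_MODELS) -> int:
    """Return the estimated processing minutes for `models` models."""
    return MINUTES_PER_MODEL[detail] * models


high = total_minutes("High")
medium = total_minutes("Medium")
print(f"High: {high} min, Medium: {medium} min, saved: {high - medium} min")
```

Under those assumptions, the lower setting turns roughly four hours of processing into about twenty minutes, which is the kind of saving that makes the December 1st deadline realistic.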

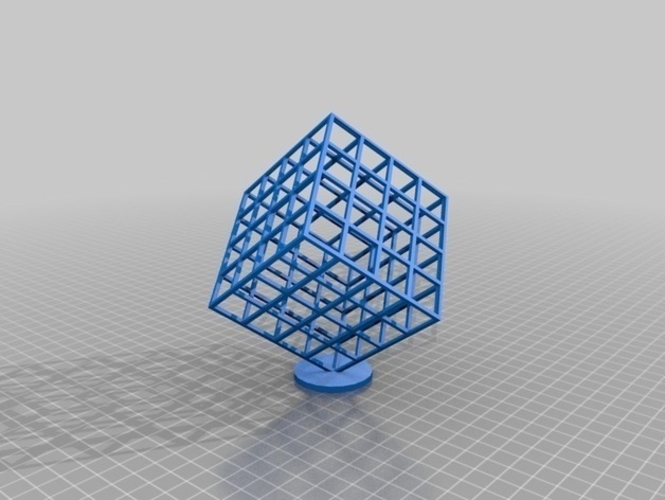

I am surprised by the quality of these 3D models, since the photos themselves were taken on my phone, which is a part of the low-cost approach of the project. I have realized over the course of this project that it is the quality of the input that most drastically affects the output. The greatest impact on these models is the number of photos collected in each profile: the fewer the photos, the worse and blurrier the final result, while a larger number of photos results in a crisp, high-definition model. Incomplete or missing sections of certain models already send me back to restart the process, and rushing the photography only makes this worse, because low-resolution photos send me back to do it again correctly. This project has taught me to do my due diligence when taking the first step in the modelling process. Building on a poor photo collection is like building on a shaky foundation: no matter how much effort I put in after that point, the results will be less than ideal.